
Recent articles in the business press have discussed the possibility that the U.S. economy is entering a period of higher growth in labor productivity:

“Fed’s Goolsbee Says Strong Hiring Hints at Productivity Growth Burst” (link)

“US Productivity Is on the Upswing Again. Will AI Supercharge It?” (link)

“Can America Turn a Productivity Boomlet Into a Boom?” (link)

In Macroeconomics, Chapter 16, Section 16.7 (Economics, Chapter 26, Section 26.7), we highlighted the role of growth in labor productivity in explaining the growth rate of real GDP using the following equations. First, an identity:

Real GDP = Number of hours worked × (Real GDP/Number of hours worked),

where (Real GDP/Number of hours worked) is labor productivity.

And because the growth rate of a product of variables is approximately equal to the sum of the growth rates of those variables, we have:

Growth rate of real GDP = Growth rate of hours worked + Growth rate of labor productivity
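
The additive relationship is an approximation that works well when growth rates are small. As a rough illustration, the following Python sketch compares the exact growth rate implied by the identity with the sum of the two growth rates. The growth rates used are made-up values chosen only for illustration, not actual data, and the function names are hypothetical.

```python
def exact_gdp_growth(hours_growth, productivity_growth):
    """Exact growth rate of real GDP (in percent) implied by the identity
    Real GDP = hours worked x labor productivity."""
    return ((1 + hours_growth / 100) * (1 + productivity_growth / 100) - 1) * 100


def approx_gdp_growth(hours_growth, productivity_growth):
    """Approximate growth rate of real GDP (in percent): the sum of the two rates."""
    return hours_growth + productivity_growth


# Illustrative values only (assumptions, not data from the post).
hours = 1.0         # percent growth in hours worked
productivity = 2.0  # percent growth in labor productivity

exact = exact_gdp_growth(hours, productivity)
approx = approx_gdp_growth(hours, productivity)
print(f"Exact: {exact:.2f}%  Approximate: {approx:.2f}%")
```

With these illustrative values, the two calculations differ by only about 0.02 percentage point, which is why the additive version is commonly used for annual growth rates of this size.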

From 1950 to 2023, real GDP grew at an average annual rate of 3.1 percent. In recent years, real GDP has been growing more slowly. For example, it grew at a rate of only 2.0 percent per year from 2000 to 2023. In February 2024, the Congressional Budget Office (CBO) forecast that real GDP would grow at 2.0 percent per year from 2024 to 2034. Although the difference between a growth rate of 3.1 percent and a growth rate of 2.0 percent may seem small, if real GDP were to return to growing at 3.1 percent per year, it would be $3.3 trillion larger in 2034 than if it grows at 2.0 percent per year. The additional $3.3 trillion in real GDP would result in higher incomes for U.S. residents and would make it easier for the federal government to reduce the size of the federal budget deficit and to better fund programs such as Social Security and Medicare. (We discuss the issues concerning the federal government’s budget deficit in this earlier blog post.)
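
The effect of compounding can be sketched in a few lines of Python. The starting level of real GDP and the 10-year horizon below are assumptions made for illustration. The post does not state the base value behind its calculation, so this sketch shows the mechanics and will not necessarily reproduce the $3.3 trillion figure exactly.

```python
def project_level(start_level, growth_rate_pct, years):
    """Level reached after compounding a constant annual growth rate."""
    return start_level * (1 + growth_rate_pct / 100) ** years


# HYPOTHETICAL starting level of real GDP, in trillions of dollars.
# This is an assumed value, not a figure taken from the post.
base_gdp = 22.5
years = 10  # assumed horizon, 2024 to 2034

gdp_high = project_level(base_gdp, 3.1, years)  # growth at 3.1 percent per year
gdp_low = project_level(base_gdp, 2.0, years)   # growth at 2.0 percent per year
gap = gdp_high - gdp_low

print(f"Real GDP after {years} years at 3.1%: ${gdp_high:.1f} trillion")
print(f"Real GDP after {years} years at 2.0%: ${gdp_low:.1f} trillion")
print(f"Difference: ${gap:.1f} trillion")
```

Because growth compounds, the gap between the two paths widens each year, so a difference of about one percentage point in the annual growth rate adds up to trillions of dollars over a decade.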

Why has growth in real GDP slowed from a 3.1 percent rate to a 2.0 percent rate? The two terms on the right-hand side of the equation for growth in real GDP, the growth in hours worked and the growth in labor productivity, have both slowed. Slowing population growth and a decline in the average number of hours worked per worker have caused the growth rate of hours worked to slow substantially, from a rate of 2.0 percent per year from 1950 to 2023 to a forecast rate of only 0.4 percent per year from 2024 to 2034.

Falling birthrates explain most of the decline in population growth. Although lower birthrates have been partially offset by higher levels of immigration in recent years, it seems unlikely that birthrates will increase much even in the long run, and levels of immigration also seem unlikely to increase substantially in the future. Therefore, a significant increase in the growth rate of real GDP will require an increase in the growth rate of labor productivity.

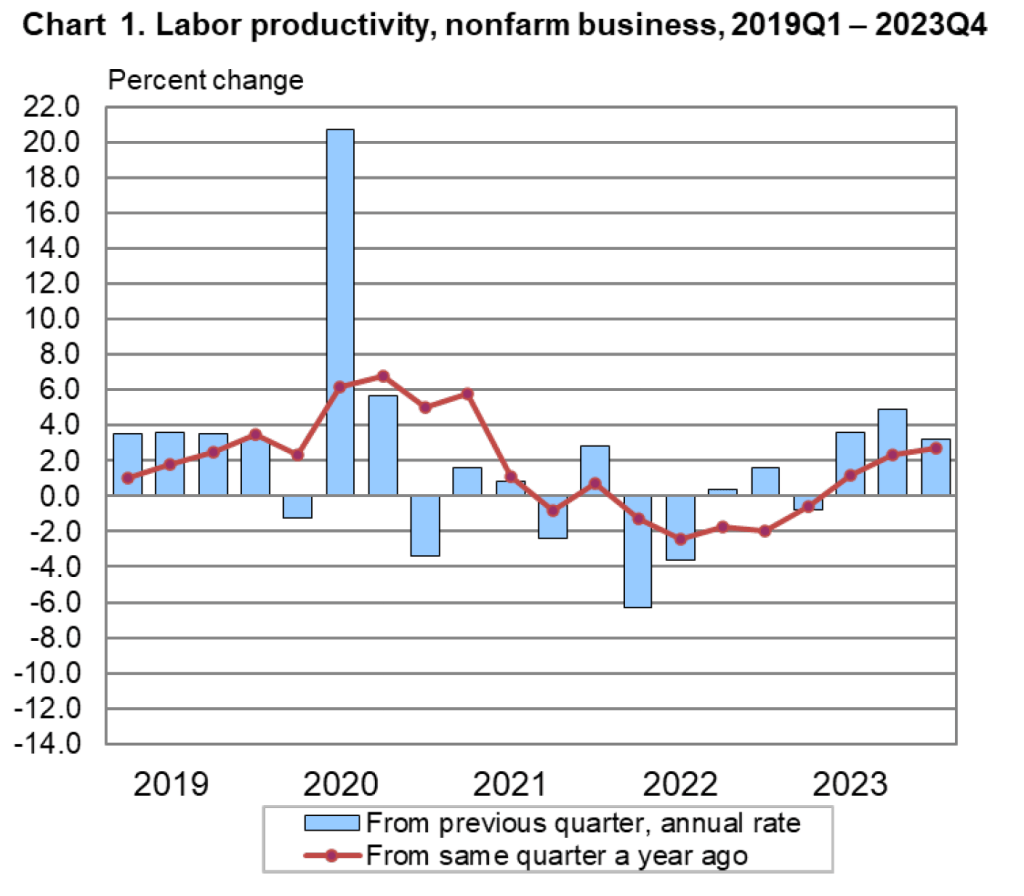

The Bureau of Labor Statistics (BLS) publishes quarterly data on labor productivity. (Note that the BLS series is for labor productivity in the nonfarm business sector rather than for the whole economy. Output of the nonfarm business sector excludes output by government, nonprofit businesses, and households. Over long periods, growth in real GDP per hour worked and growth in real output of the nonfarm business sector per hour worked have similar trends.) The following figure is taken from the BLS report “Productivity and Costs,” which was released on February 1, 2024.

Note that the growth in labor productivity increased during the last three quarters of 2023, whether we measure the growth rate as the percentage change from the same quarter in the previous year or as growth in a particular quarter expressed as an annual rate. It’s this increase in labor productivity during 2023 that has led to speculation that labor productivity might be entering a period of higher growth. The following figure shows labor productivity growth, measured as the percentage change from the same quarter in the previous year, for the whole period from 1950 to 2023.

The figure indicates that labor productivity has fluctuated substantially over this period. We can note, in particular, productivity growth during two periods: First, from 2011 to 2018, labor productivity grew at the very slow rate of 0.9 percent per year. Some of this slowdown reflected the slow recovery of the U.S. economy from the Great Recession of 2007-2009, but the slowdown persisted long enough to cause concern that the U.S. economy might be entering a period of stagnation or very slow growth.

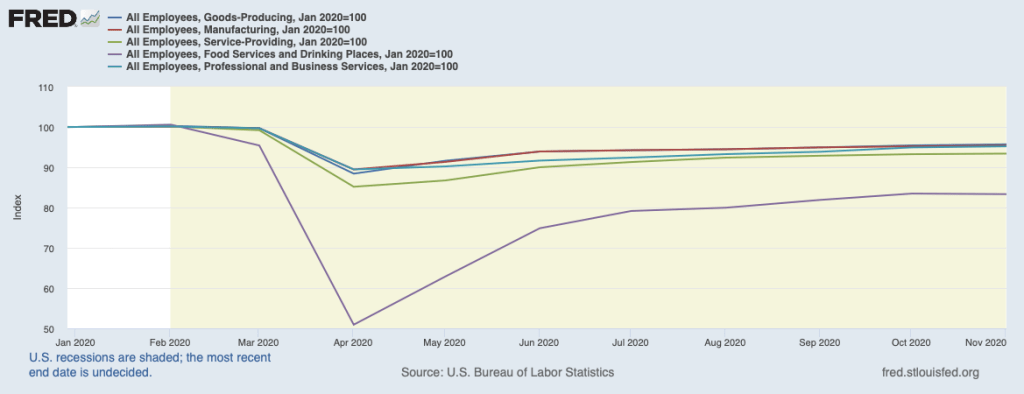

Second, from 2019 through 2023, labor productivity went through very large swings. Labor productivity experienced strong growth during 2019. Then, as the Covid-19 pandemic began affecting the U.S. economy, labor productivity soared through the first half of 2021 before declining for five consecutive quarters, from the first quarter of 2022 through the first quarter of 2023. This was the first time productivity had fallen for that long a period since the BLS first began collecting the data. Although these swings were particularly large, the figure shows that during and in the immediate aftermath of recessions labor productivity typically fluctuates dramatically. The reason for the fluctuations is that firms can be slow to lay workers off at the beginning of a recession, which causes labor productivity to fall, and slow to hire workers back at the beginning of an economic recovery, which causes labor productivity to rise.

Does the recent increase in labor productivity growth represent a trend? Labor productivity growth, measured as the percentage change from the same quarter in the previous year, was 2.7 percent during the fourth quarter of 2023, higher than in any quarter since the first quarter of 2021. Measured as the percentage change from the previous quarter at an annual rate, labor productivity grew at a very high average rate of 3.9 percent during the last three quarters of 2023. It’s this high rate that some observers are pointing to when they wonder whether growth in labor productivity is on an upward trend.
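
The two ways of measuring productivity growth mentioned above can give quite different readings for the same quarter. The following Python sketch shows how each is calculated from a quarterly productivity index. The index values are hypothetical numbers chosen for illustration, not BLS data, and the function names are likewise made up for this example.

```python
def year_over_year_growth(index, t):
    """Percentage change from the same quarter in the previous year."""
    return (index[t] / index[t - 4] - 1) * 100


def annualized_quarterly_growth(index, t):
    """Percentage change from the previous quarter, expressed at an annual
    rate by compounding the one-quarter change over four quarters."""
    return ((index[t] / index[t - 1]) ** 4 - 1) * 100


# HYPOTHETICAL quarterly values of a labor productivity index (not BLS data).
productivity_index = [100.0, 100.2, 100.1, 100.5, 101.4, 102.3]
latest = len(productivity_index) - 1

yoy = year_over_year_growth(productivity_index, latest)
annualized = annualized_quarterly_growth(productivity_index, latest)
print(f"Year-over-year growth: {yoy:.1f}%")
print(f"Annualized quarterly growth: {annualized:.1f}%")
```

The annualized quarterly measure reacts more quickly to recent changes but is also more volatile, because a single quarter's change is compounded four times. The year-over-year measure smooths out some of that quarter-to-quarter noise.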

As with any other economic data, you should use caution in interpreting changes in labor productivity over a short period. The productivity data may be subject to large revisions as the two underlying series—real output and hours worked—are revised in coming months. In addition, it’s not clear why the growth rate of labor productivity would be increasing in the long run. The most common reasons advanced are: 1) the productivity gains from the increase in the number of people working from home since the pandemic, 2) businesses’ increased use of artificial intelligence (AI), and 3) potential efficiencies that businesses discovered as they were forced to operate with a shortage of workers during and after the pandemic.

To this point, it’s difficult to evaluate the long-run effects of any of these factors. Economists and business managers haven’t yet reached a consensus on whether working from home increases or decreases productivity. (The debate is summarized in this National Bureau of Economic Research Working Paper, written by Jose Maria Barrero of Instituto Tecnologico Autonomo de Mexico, and Steven Davis and Nicholas Bloom of Stanford. You may need to access the paper through your university library.)

Many economists believe that AI is a general purpose technology (GPT), which means that it may have broad effects throughout the economy. But to this point, AI hasn’t been adopted widely enough to be a plausible cause of an increase in labor productivity. In addition, as Erik Brynjolfsson and Daniel Rock of MIT and Chad Syverson of the University of Chicago argue in this paper, the introduction of a GPT may initially cause productivity to fall as firms attempt to use an unfamiliar technology. The third reason—efficiency gains resulting from the pandemic—is to this point mainly anecdotal. There are many cases of businesses that discovered efficiencies during and immediately after Covid as they struggled to operate with a smaller workforce, but we don’t yet know whether these cases are sufficiently common to have had a noticeable effect on labor productivity.

So, we’re left with the conclusion that if the high labor productivity growth rates of 2023 can be maintained, the growth rate of real GDP will correspondingly increase more than most economists are expecting. But it’s too early to know whether recent high rates of labor productivity growth are sustainable.